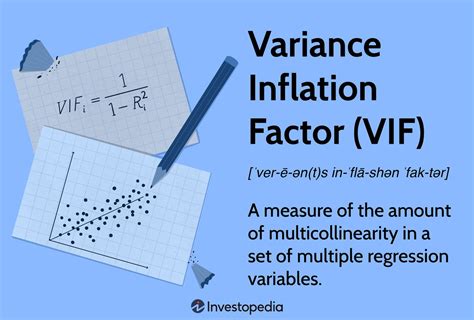

The Variance Inflation Factor (VIF) is a statistical measure used to diagnose multicollinearity in regression analysis. It quantifies the degree to which a predictor variable is correlated with other predictor variables in the model. In essence, VIF measures how much the variance of a regression coefficient is inflated due to multicollinearity. This concept is crucial in regression modeling because high multicollinearity can lead to unstable estimates of regression coefficients, making it challenging to interpret the results accurately.

Understanding VIF requires a basic knowledge of regression analysis and the issues associated with multicollinearity. When predictor variables are highly correlated, it becomes difficult for the model to uniquely estimate the contribution of each variable to the response variable. This situation can result in large standard errors for the regression coefficients, reducing the precision of the estimates. The VIF helps in identifying which predictor variables are involved in multicollinearity, allowing for corrective actions such as variable selection or dimensionality reduction.
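To make the effect on standard errors concrete, here is a minimal sketch in Python with NumPy. The language, the synthetic data, the correlation of 0.95, and the helper name `unit_standard_errors` are all assumptions chosen for illustration, not part of the original discussion. It compares the standard error of the same coefficient when the second predictor is independent of the first and when it is strongly correlated with it.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the run is repeatable
n = 200
x1 = rng.standard_normal(n)
z = rng.standard_normal(n)

# Design A: the second predictor is independent of x1.
x2_indep = z
# Design B: the second predictor is built to correlate with x1 at roughly 0.95.
rho = 0.95
x2_corr = rho * x1 + np.sqrt(1 - rho**2) * z


def unit_standard_errors(*columns):
    """Standard errors of OLS coefficients when the noise variance is 1.

    Returns sqrt(diag((X'X)^-1)) for a design with an intercept column,
    i.e. the part of the standard error that depends only on the predictors.
    """
    X = np.column_stack([np.ones(len(columns[0])), *columns])
    return np.sqrt(np.diag(np.linalg.inv(X.T @ X)))


# Index 1 is the coefficient of x1 (index 0 is the intercept).
se_indep = unit_standard_errors(x1, x2_indep)[1]
se_corr = unit_standard_errors(x1, x2_corr)[1]

print(f"independent predictors: {se_indep:.4f}")
print(f"correlated predictors:  {se_corr:.4f}")
print(f"ratio: {se_corr / se_indep:.2f}")
```

In theory the ratio is about 1 / sqrt(1 - 0.95^2) ≈ 3.2, so the printed ratio should land near 3. The sample value will differ somewhat from run to run if the seed is changed.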

Key Points

- VIF is a measure used to detect multicollinearity among predictor variables in a regression model.

- A high VIF value indicates that a predictor variable is highly correlated with one or more other predictor variables.

- A common rule of thumb flags a VIF above 5, or above 10 under a more lenient convention, as a sign of high multicollinearity, though the appropriate threshold depends on the context.

- Correcting for multicollinearity might involve removing correlated variables, using dimensionality reduction techniques, or applying regularization methods.

- Interpreting VIF values requires considering the context of the analysis, including the research question, data characteristics, and the implications of multicollinearity on the model's interpretability and predictive performance.

Calculating Variance Inflation Factor

The calculation of VIF involves the following formula: VIF = 1 / (1 - R^2), where R^2 is the coefficient of determination from the regression of a predictor variable on all other predictor variables. Essentially, for each predictor variable, a separate regression model is run where that variable is the response, and all other predictor variables are the predictors. The R^2 from this model is then used to calculate the VIF for that predictor variable.
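The procedure can be sketched in Python with NumPy as follows. This is a minimal illustration, not a reference implementation: the function name `vif`, the synthetic predictors `x1` to `x4`, and the decision to include an intercept in each auxiliary regression are assumptions made for the example.

```python
import numpy as np


def vif(X):
    """VIF for each column of X (rows = observations, columns = predictors)."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    result = np.empty(p)
    for j in range(p):
        target = X[:, j]                    # predictor j plays the response
        others = np.delete(X, j, axis=1)    # all remaining predictors
        design = np.column_stack([np.ones(n), others])  # add an intercept
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        residuals = target - design @ coef
        ss_res = residuals @ residuals
        ss_tot = np.sum((target - target.mean()) ** 2)
        r_squared = 1.0 - ss_res / ss_tot
        result[j] = 1.0 / (1.0 - r_squared)  # VIF = 1 / (1 - R^2)
    return result


# Illustrative synthetic data (hypothetical, not from any real study).
rng = np.random.default_rng(0)
x1 = rng.standard_normal(500)
x2 = rng.standard_normal(500)
x3 = x1 + x2 + 0.1 * rng.standard_normal(500)  # nearly a linear combination
x4 = rng.standard_normal(500)                  # unrelated to the others

vifs = vif(np.column_stack([x1, x2, x3, x4]))
print(np.round(vifs, 2))
```

Here `x1`, `x2`, and `x3` should receive large VIFs because each is almost determined by the other two, while `x4` should stay close to 1. If a predictor is an exact linear combination of the others, R^2 equals 1 and the division fails or returns infinity, which is itself a signal of perfect multicollinearity. In practice, a library routine such as `variance_inflation_factor` in `statsmodels.stats.outliers_influence` can be used instead; check its documentation for how it treats the intercept.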

Because VIF depends only on R^2, the two move together. An R^2 close to 1 means the predictor is almost fully explained by the other predictors, so the VIF is large and grows without bound as R^2 approaches 1, signalling high multicollinearity. An R^2 close to 0 means the other predictors explain little of it, so the VIF stays near its minimum of 1, signalling low multicollinearity.
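A few worked values show how quickly VIF rises with R^2. The short Python sketch below applies the formula directly; the helper name `vif_from_r2` is hypothetical. It also prints the square root of the VIF, which is the factor by which the coefficient's standard error is inflated relative to a predictor that is uncorrelated with the others.

```python
def vif_from_r2(r_squared):
    """Apply VIF = 1 / (1 - R^2) for an R^2 in the range [0, 1)."""
    if not 0.0 <= r_squared < 1.0:
        raise ValueError("R^2 must satisfy 0 <= R^2 < 1")
    return 1.0 / (1.0 - r_squared)


for r_squared in (0.0, 0.5, 0.8, 0.9):
    value = vif_from_r2(r_squared)
    print(f"R^2 = {r_squared:.2f} -> VIF = {value:.1f}, "
          f"standard error factor = {value ** 0.5:.2f}")
```

An R^2 of 0.8 corresponds to a VIF of 5 and an R^2 of 0.9 to a VIF of 10, which is where the two commonly quoted thresholds come from.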

Interpreting VIF Values

Interpreting VIF values is somewhat subjective and depends on the context of the analysis. However, as a general guideline, VIF values are interpreted as follows:

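- VIF = 1 indicates no multicollinearity: the predictor is uncorrelated with the other predictors.

- VIF between 1 and 5 suggests moderate multicollinearity.

- VIF greater than 5 (or greater than 10, under a more lenient threshold) indicates high multicollinearity.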

It’s worth noting that the choice of threshold (5 or 10) can depend on the specific requirements of the analysis and the field of study. In some cases, even moderate multicollinearity may be problematic, especially if the goal is to obtain precise estimates of the regression coefficients.

| VIF Value | Interpretation |
|---|---|
| 1 | No multicollinearity |
| 1 to 5 | Moderate multicollinearity |
| > 5 (or > 10) | High multicollinearity |

Addressing Multicollinearity

Upon identifying high multicollinearity through VIF, several strategies can be employed to address the issue. These include:

- Variable Selection: Removing one or more of the highly correlated variables from the model. This approach requires careful consideration to ensure that the removed variables do not significantly contribute to the explanation of the response variable.

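- Dimensionality Reduction: Techniques such as Principal Component Analysis (PCA) replace the original predictors with a smaller set of uncorrelated components, each a linear combination of the original variables, so that highly correlated predictors are summarized together. The trade-off is that components can be harder to interpret than the original variables.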

- Regularization Methods: Ridge regression and Lasso regression add a penalty term to the cost function that shrinks the regression coefficients, which stabilizes the estimates when predictors are correlated. Lasso can also set some coefficients exactly to zero, so it doubles as a form of variable selection.
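As an illustration of the first strategy, the following Python sketch computes VIFs, drops the predictor with the largest value, and recomputes. It is a simplified example under stated assumptions: the data are synthetic, the helper name `vif_from_correlation` is hypothetical, and the predictor to drop is chosen purely mechanically, whereas a real analysis should also weigh each variable's relevance to the response. The helper relies on the fact that the VIFs equal the diagonal entries of the inverse of the predictors' correlation matrix.

```python
import numpy as np


def vif_from_correlation(X):
    """VIFs as the diagonal of the inverse correlation matrix of the predictors."""
    corr = np.corrcoef(X, rowvar=False)  # columns are variables
    return np.diag(np.linalg.inv(corr))


# Illustrative synthetic predictors (hypothetical data).
rng = np.random.default_rng(1)
n = 500
x1 = rng.standard_normal(n)
x2 = rng.standard_normal(n)
x3 = x1 + x2 + 0.1 * rng.standard_normal(n)  # nearly redundant given x1 and x2
X = np.column_stack([x1, x2, x3])

vif_before = vif_from_correlation(X)
worst = int(np.argmax(vif_before))        # index of the highest-VIF predictor
X_reduced = np.delete(X, worst, axis=1)   # remove that column
vif_after = vif_from_correlation(X_reduced)

print("before:", np.round(vif_before, 2))
print("dropped column index:", worst)
print("after: ", np.round(vif_after, 2))
```

After the redundant column is removed, the remaining VIFs should fall well below the usual thresholds. With many predictors, the same step can be repeated one variable at a time, recomputing the VIFs after each removal.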

Conclusion and Future Directions

In conclusion, the Variance Inflation Factor is a vital diagnostic tool in regression analysis, helping to identify and address multicollinearity among predictor variables. Understanding and interpreting VIF values requires a nuanced approach, considering both the statistical implications of multicollinearity and the practical context of the analysis. As data becomes increasingly complex and multidimensional, the importance of diagnosing and addressing multicollinearity will continue to grow, underscoring the need for robust statistical methods and careful consideration of the underlying data structure.

What is the primary purpose of calculating the Variance Inflation Factor (VIF) in regression analysis?

The primary purpose of calculating VIF is to diagnose multicollinearity among predictor variables. Multicollinearity can lead to unstable estimates of regression coefficients, making it challenging to interpret the results accurately.

How do you interpret VIF values in the context of regression analysis?

VIF values are interpreted based on their magnitude: values close to 1 indicate little or no multicollinearity, values between 1 and 5 suggest moderate multicollinearity, and values greater than 5 or 10 indicate high multicollinearity. The exact threshold for concern can vary depending on the context and field of study.

What strategies can be employed to address multicollinearity identified through VIF analysis?

Strategies to address multicollinearity include variable selection (removing highly correlated variables), dimensionality reduction techniques (such as PCA), and regularization methods (like Ridge and Lasso regression). The choice of strategy depends on the research question, data characteristics, and the implications of multicollinearity on model interpretability and predictive performance.