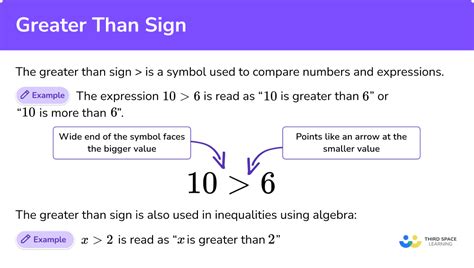

Comparing two numbers seems trivial, but interpreting data trends requires more care: a simple comparison, such as whether 0.9 is higher than 3.03, can feed into larger patterns in analytics, economic models, and scientific research. Mathematically the answer is clear: 0.9 is less than 3.03. The more useful question is how such comparisons underpin statistical significance, data normalization, and predictive modeling. The context in which the figures are examined often changes their implications, especially in financial forecasting, scientific experiments, and machine learning. This article clarifies these basic relationships, examines their role in larger data workflows, and discusses their practical and theoretical significance.

Key Points

- Basic numerical comparisons reveal fundamental data relationships essential for trend analysis.

- Understanding data normalization clarifies how magnitude differences influence model performance.

- Context-dependent interpretation determines whether such figures impact decision-making processes.

- Data-driven insights require attention to scale, variance, and contextual relevance.

- Expert perspective underscores the importance of precise analysis in complex data environments.

Interpreting Numerical Relationships in Data Trends

At the core of data analysis lies the need to interpret numerical relationships accurately, whether in raw form or within normalized datasets. When contrasting figures such as 0.9 and 3.03, it’s essential to recognize not just their numerical difference but also what they represent within the dataset’s context. For example, in financial metrics, these numbers could be ratios, growth rates, or error margins, and their interpretation varies significantly with scale and application. Superficial comparisons often overlook scale and relative significance, especially when a dataset contains values that differ by orders of magnitude.

The Significance of Scale and Magnitude in Data Analysis

In many analytical frameworks, the scale of values determines their influence on trend detection. Normalization techniques such as min-max scaling and z-score standardization adjust different datasets so that comparisons are meaningful. Consider a dataset whose values range from 0.1 to 10,000. Relative to that range, the gap between 0.9 and 3.03 is negligible, and the two figures may matter only if they come from different underlying processes measured on different scales. Conversely, in narrow-range, high-precision measurements such as pH levels or sensor readings, values like 0.9 and 3.03 might mark critical thresholds or anomalies.

| Category | Details |
|---|---|
| Data Range | 0.1 to 10,000, illustrating diverse scale implications |
| Normalization Method | Min-max scaling aligns data for comparison, revealing true relationships |
| Impact on Models | Scale affects model sensitivity and accuracy, especially in machine learning |
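
As a rough illustration of the two normalization methods named in this section, the sketch below applies min-max scaling and z-score standardization using only the Python standard library. The sample lists `wide` and `narrow` are hypothetical values chosen to match the 0.1 to 10,000 range discussed here; they do not come from any real dataset.

```python
from statistics import mean, pstdev


def min_max_scale(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("min-max scaling is undefined when all values are equal")
    return [(v - lo) / (hi - lo) for v in values]


def z_score(values):
    """Standardize values to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        raise ValueError("z-scores are undefined when all values are equal")
    return [(v - mu) / sigma for v in values]


# Hypothetical samples: one spans 0.1 to 10,000, the other only 0.9 to 3.03.
wide = [0.1, 0.9, 3.03, 250.0, 10_000.0]
narrow = [0.9, 1.4, 2.2, 3.03]

wide_scaled = min_max_scale(wide)
narrow_scaled = min_max_scale(narrow)

# Gap between 0.9 and 3.03 after scaling, in each dataset.
wide_gap = wide_scaled[2] - wide_scaled[1]
narrow_gap = narrow_scaled[3] - narrow_scaled[0]

print(f"gap in wide dataset:   {wide_gap:.6f}")
print(f"gap in narrow dataset: {narrow_gap:.6f}")

# Z-scores express each value as a distance from the mean in standard deviations.
wide_z = z_score(wide)
print([round(z, 3) for z in wide_z])
```

In the wide sample the two figures end up almost indistinguishable after scaling, while in the narrow sample they sit at opposite ends of the range. The raw difference is identical in both cases, so the contrast comes entirely from the surrounding data.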

Understanding the Role of Context in Data Trends

Context remains king when interpreting whether one figure surpasses another in importance or magnitude. Does 0.9 represent a probability, a rate, or a proportion? Does 3.03 represent a volume, a count, or an average? These distinctions matter because the same raw number can convey very different insights depending on units and measurement domain. For example, in epidemiological modeling, a 0.9% infection rate is equivalent to 9 cases per 1,000 individuals, which is roughly three times a case count of 3.03 per 1,000, even though 0.9 is the smaller raw number. Recognizing such contextual nuances helps data scientists and analysts avoid misinterpretation and build accurate trend narratives.
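
The unit conversion behind the epidemiological example can be checked in a few lines. This is a minimal sketch; the function name `percent_to_per_thousand` is an illustrative choice rather than part of any library.

```python
def percent_to_per_thousand(percent):
    """Convert a percentage into a rate per 1,000 individuals (1% = 10 per 1,000)."""
    return percent * 10


infection_rate_pct = 0.9    # the 0.9% infection rate from the example
case_rate_per_1000 = 3.03   # the case count per 1,000 individuals

infection_per_1000 = percent_to_per_thousand(infection_rate_pct)

# Compare only after both figures share the same unit.
print(f"{infection_rate_pct}% is about {infection_per_1000:.2f} per 1,000")
print("0.9% exceeds 3.03 per 1,000:", infection_per_1000 > case_rate_per_1000)
```

Once both figures are expressed per 1,000 individuals, the value that looked smaller as a raw number turns out to be the larger rate.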

Data Normalization and Its Impact on Comparative Analysis

Normalization techniques help mitigate issues arising from varying scales and units by placing disparate data points in a comparable frame. In machine learning, feature scaling keeps features whose values sit near 0.9 and near 3.03 from contributing to training in proportion to raw magnitude alone, which avoids bias towards larger values. Methods such as min-max normalization map each feature to a common range, often 0 to 1. Within a single feature the mapping preserves order, so 0.9 still scales below 3.03. Across features that are scaled separately, however, a 0.9 near the top of its range can come out “higher” than a 3.03 near the bottom of its range. Interpretability may also diminish when models operate on normalized data, unless inverse transformations and domain knowledge are applied to read the results in their original units.

| Category | Details |
|---|---|
| Normalization Technique | Min-max normalization scales each feature to the 0-1 range for comparison |
| Model Sensitivity | Proper normalization prevents model bias towards high-magnitude features |
| Interpretability | Inverse transformation restores original units so results stay human-readable after normalization |
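
To make the per-feature behaviour of min-max normalization concrete, the sketch below scales two features independently and then inverts the transformation. The feature names and values (`probability`, `count_avg`) are hypothetical, and the helper functions are illustrative rather than taken from a particular library.

```python
def fit_min_max(values):
    """Return the (min, max) bounds needed to scale and unscale one feature."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot scale a constant feature")
    return lo, hi


def scale(value, bounds):
    """Map a raw value into the 0-1 range defined by the feature's bounds."""
    lo, hi = bounds
    return (value - lo) / (hi - lo)


def unscale(scaled, bounds):
    """Inverse transformation: map a 0-1 value back to the original units."""
    lo, hi = bounds
    return scaled * (hi - lo) + lo


# Hypothetical features measured on different scales.
probability = [0.1, 0.4, 0.9, 1.0]     # 0.9 sits near the top of this range
count_avg = [3.03, 12.5, 48.0, 95.0]   # 3.03 is the minimum of this range

p_bounds = fit_min_max(probability)
c_bounds = fit_min_max(count_avg)

scaled_p = scale(0.9, p_bounds)
scaled_c = scale(3.03, c_bounds)

# After separate scaling, 0.9 ranks above 3.03 relative to each feature's range.
print("scaled 0.9 > scaled 3.03:", scaled_p > scaled_c)

# Inverting the scaling recovers the original, human-readable value.
print("recovered probability:", round(unscale(scaled_p, p_bounds), 6))
```

The bounds are stored at fit time because the inverse transformation needs them. Discarding them is a common reason scaled results cannot be traced back to their original units.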

Implications for Practice in Data-Driven Fields

From finance to biomedical research, the interpretation of figures like 0.9 and 3.03 influences decisions and outcomes. In financial risk assessment, small ratios or error margins, such as 0.9%, can be the difference between approving or rejecting a loan application. Similarly, in clinical trials, understanding whether a specific measurement exceeds a critical threshold guides patient care and regulatory approval. The challenge is integrating these quantitative insights with qualitative contextual information—ensuring that data trends translate into actionable intelligence.

Advanced Analytical Tools and Techniques

Modern data analysis relies heavily on machine learning algorithms, statistical tests, and visualization techniques, all of which require a solid understanding of scale, significance, and context. Principal component analysis (PCA) reduces dimensionality and shows how much each feature contributes to the variance in a dataset. Because PCA is sensitive to scale, features whose values sit around 0.9 and around 3.03 are usually standardized first. Confidence intervals and p-values help analysts distinguish statistically significant differences from random fluctuation, which guards against erroneous conclusions.

| Category | Details |
|---|---|
| Statistical Significance | P-values < 0.05 are conventionally treated as statistically significant; practical importance still depends on effect size and context |
| Feature Importance | PCA loadings show which features contribute most to the variance in the data, not which ones drive model predictions |
| Visualization | Boxplots and scatterplots reveal data distribution and outlier influence |
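
The article does not name a specific statistical test, so the sketch below uses a two-sided permutation test for a difference in means as one possible way to obtain a p-value with only the standard library. All four sample groups are invented for illustration, and the function name `permutation_p_value` is an assumption, not a library API.

```python
import random
from statistics import mean


def permutation_p_value(a, b, n_resamples=2000, seed=0):
    """Estimate a two-sided p-value for a difference in means by shuffling labels."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed:
            hits += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (hits + 1) / (n_resamples + 1)


# Hypothetical groups that are clearly separated (around 0.9 vs around 3.03).
group_a = [0.7, 0.8, 0.9, 1.0, 1.1, 0.9, 0.8, 1.0]
group_b = [2.9, 3.1, 3.03, 3.2, 2.8, 3.0, 3.1, 2.95]

# Hypothetical groups that contain 0.9 and 3.03 but overlap heavily.
noisy_a = [0.9, 3.5, 1.2, 4.0, 0.5, 2.8, 3.9, 1.1]
noisy_b = [3.03, 0.8, 2.5, 1.0, 3.6, 4.1, 0.9, 2.2]

p_separated = permutation_p_value(group_a, group_b)
p_noisy = permutation_p_value(noisy_a, noisy_b)

print(f"separated groups: p = {p_separated:.4f}")
print(f"overlapping groups: p = {p_noisy:.4f}")
```

The first pair of groups yields a very small p-value and the second does not, even though both pairs contain the values 0.9 and 3.03. Whether a difference counts as significant depends on the spread of the surrounding data, not on the two headline numbers alone.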

Future Directions and Evolving Trends

As data ecosystems grow more complex, new paradigms in data relationship analysis emerge. Techniques such as deep learning, advanced normalization, and real-time analytics accentuate the importance of context-aware interpretation. Emerging trends also emphasize interpretability and transparency, especially in high-stakes domains like healthcare and finance. The subtlety of figures like 0.9 versus 3.03 underscores a broader principle: quantitative relationships must be understood holistically, considering both statistical significance and domain relevance.

Key Challenges and Opportunities

Future challenges include managing data heterogeneity, addressing class imbalance, and ensuring robust model explanations. Opportunities abound in refining normalization standards, leveraging domain-specific metrics, and developing hybrid methods that combine quantitative precision with qualitative insights. For practitioners, maintaining a mindset of continual learning about data relationships and their broader implications will be necessary as the landscape evolves.

| Category | Details |
|---|---|
| Emerging Techniques | Self-supervised learning, federated analysis, and explainability tools expand analytical capacity |
| Key Challenges | Diverse data formats, noise, and interpretability demands complicate analysis |
| Opportunities | Innovations in normalization and visualization foster deeper understanding of subtle figures |

Is 0.9 actually higher than 3.03 in any meaningful context?

In pure numeric terms, no: 0.9 is less than 3.03. However, in specific contexts such as normalized data or ratio thresholds, 0.9 might surpass a critical value or boundary that influences decision-making. The meaning depends heavily on the measurement units and domain-specific significance, illustrating the importance of contextual interpretation.

How does normalization affect the comparison between figures like 0.9 and 3.03?

Normalization adjusts data scales, enabling fair comparison across disparate ranges. Techniques like min-max scaling map each feature into a shared range, often 0 to 1, so that each figure's position relative to its own range becomes clear. While normalization generally helps models that are sensitive to scale, it can obscure interpretation unless inverse transformations are applied to recover the original units and context.

What are the key considerations when analyzing these figures in predictive models?

Key considerations include the data’s scale, distribution, and relevance within the model’s feature set. Proper normalization prevents bias, and understanding the domain’s thresholds assists in correct interpretation. Additionally, statistical significance testing helps determine whether observed differences truly matter or are products of random variation.

Can these numerical comparisons influence strategic decisions?

Absolutely. Whether assessing risk ratios, economic indicators, or clinical parameters, knowing which side of a critical boundary a value falls on (for example, 0.9 lies just below a threshold of 1.0) can influence policy, investment, or clinical actions. Contextual awareness ensures data-driven decisions align with real-world implications rather than superficial numeric judgments.